
Feat/tui interface #995


base: main



- Add `lmms_eval/tui/` module with a Textual-based TUI app
- Support model selection, task selection, and settings configuration
- Add `--tui` flag to launch interactive mode
- Add `lmms-eval-tui` entry point
- Add `textual` as an optional dependency (`[tui]`)
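As a rough illustration of how a `--tui` flag can coexist with an optional `[tui]` extra, the sketch below checks whether `textual` is importable before launching the interactive mode. The functions `build_parser`, `textual_available`, and `main`, and the returned status strings, are hypothetical; this is not the PR's actual entry-point code.

```python
import argparse
import importlib.util
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser; the real lmms-eval CLI defines many more flags.
    parser = argparse.ArgumentParser(prog="lmms-eval")
    parser.add_argument("--tui", action="store_true", help="launch the interactive TUI")
    return parser


def textual_available() -> bool:
    # find_spec returns None when the optional dependency is not installed.
    return importlib.util.find_spec("textual") is not None


def main(argv: Optional[List[str]] = None) -> str:
    args = build_parser().parse_args(argv)
    if not args.tui:
        return "cli"  # normal, non-interactive evaluation path
    if not textual_available():
        # Report an actionable hint instead of surfacing an ImportError traceback.
        return "missing-textual: install the [tui] extra"
    # A real implementation would lazily import the Textual app here and run it.
    return "tui"
```

Importing the Textual app only after the flag is parsed keeps the base install usable when the `[tui]` extra is absent.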

- Move `decord`/`eva-decord` to the `[video]` optional dependency
- Use `eva-decord` only on macOS with Python < 3.12 (no wheels for 3.12+)
- Base package is now installable on all platforms
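The platform rule for the `[video]` extra can be expressed as a small function. This is a sketch under two assumptions not stated in the PR: plain `decord` is selected on every non-macOS platform, and nothing is installed on macOS with Python 3.12+. The PR's actual `pyproject.toml` markers may differ; the corresponding PEP 508 markers are shown in the comments.

```python
import sys
from typing import Optional, Tuple

_CURRENT_VERSION = (sys.version_info.major, sys.version_info.minor)


def video_backend(platform: str = sys.platform,
                  version: Tuple[int, int] = _CURRENT_VERSION) -> Optional[str]:
    """Return the decord distribution the [video] extra would pull in (assumed rules)."""
    if platform != "darwin":
        # Assumed marker: decord; sys_platform != "darwin"
        return "decord"
    if version < (3, 12):
        # Marker: eva-decord; sys_platform == "darwin" and python_version < "3.12"
        return "eva-decord"
    # No eva-decord wheels for 3.12+ on macOS, so no video backend is installed.
    return None
```

Video-task code would then need to handle the `None` case, for example by raising a clear error when `decord` cannot be imported.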


Claude finished @Luodian's task in 2m 20s.

Code Review Complete ✅

Reviewed PR #995: TUI Interface Implementation

This is an impressive feature addition that adds a Terminal User Interface (TUI) for interactive configuration of lmms-eval. Here's my comprehensive review:

🎯 Overview

Strengths:

Critical Issues to Address:

1. Files in Wrong Location


💡 Codex Review

Here are some automated review suggestions for this pull request.

Reviewed commit: 61c3158abc


```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
UI/UX Pro Max Core - BM25 search engine for UI/UX style guides
"""
```


core package

Adding a top-level `core.py` introduces a name collision with the `core` package that the PLM model imports (`from core.args ...` in `lmms_eval/models/simple/plm.py`). When running from the repo root (or from any cwd on `sys.path`), Python resolves `core` to this new module, which is not a package, so importing `core.args` fails with `ModuleNotFoundError: No module named 'core.args'; 'core' is not a package`. That makes `--model plm` unusable. Renaming or moving this helper module (e.g., under `lmms_eval/` or to a less generic name) avoids breaking the existing model import path.
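The failure mode described above can be reproduced with the standard library alone. This sketch writes a stand-in `core.py` into a temporary directory, puts that directory first on `sys.path` (mimicking a run from the repo root), and then attempts the `core.args` import that `plm.py` relies on. The helper name `reproduce_core_shadowing` is hypothetical.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def reproduce_core_shadowing() -> str:
    """Return the error raised when a plain core.py shadows a `core` package."""
    saved = {name: sys.modules.pop(name) for name in ("core", "core.args") if name in sys.modules}
    with tempfile.TemporaryDirectory() as repo_root:
        # Stand-in for the top-level core.py; its contents are irrelevant here.
        Path(repo_root, "core.py").write_text('"""BM25 search helper."""\n')
        sys.path.insert(0, repo_root)  # like launching Python from the repo root
        importlib.invalidate_caches()
        try:
            importlib.import_module("core.args")  # what `from core.args import ...` needs
        except ModuleNotFoundError as exc:
            return str(exc)
        finally:
            # Undo global side effects so the demo leaves the interpreter clean.
            sys.path.remove(repo_root)
            sys.modules.pop("core", None)
            sys.modules.pop("core.args", None)
            sys.modules.update(saved)
    return "core.args imported"
```

Once the helper is renamed or moved under `lmms_eval/`, the same import resolves to the intended package again.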


`lmms_eval/tui/app.py` (outdated)

```python
    # Excerpt: keyword arguments used when launching the composed command
    text=True,
    bufsize=1,
    env=env,
    shell=True,
    start_new_session=True,
```


The TUI executes the composed command with `shell=True` (which uses `/bin/sh` on Linux), but the default `activate_cmd` is `source .venv/bin/activate`. `source` is a bashism that `/bin/sh` does not support on common distros (e.g., where `/bin/sh` is dash). As a result, the default START action fails immediately unless the user manually edits the activation command. Consider using the POSIX-compatible form (`. .venv/bin/activate`) or running the shell with `executable='/bin/bash'` so the default works.
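One way to apply this suggestion is to build the command preview with the POSIX `.` builtin and quote every user-supplied value with `shlex.join`, so the same string is safe both to display and to run under `/bin/sh`. The `compose_command` helper, its settings keys, and the `python -m lmms_eval` flag names are assumptions for illustration, not the TUI's actual code.

```python
import shlex
from typing import Dict, List


def compose_command(settings: Dict[str, str],
                    tasks: List[str],
                    activate_cmd: str = ". .venv/bin/activate") -> str:
    """Build a /bin/sh-compatible command line (hypothetical helper)."""
    argv = ["python", "-m", "lmms_eval", "--model", settings["model"],
            "--tasks", ",".join(tasks)]
    # Optional settings map to CLI flags; these keys and flag names are assumptions.
    for key in ("batch_size", "limit", "device", "verbosity", "output_path"):
        value = settings.get(key)
        if value:
            argv += [f"--{key}", value]
    # shlex.join quotes each argument, so paths with spaces survive the shell.
    eval_cmd = shlex.join(argv)
    # `.` is POSIX; `source` is not guaranteed to exist in /bin/sh (e.g. dash).
    return f"{activate_cmd} && {eval_cmd}" if activate_cmd else eval_cmd
```

The resulting string can be passed to `subprocess.Popen(cmd, shell=True, ...)` unchanged; keeping `source` would instead require `executable="/bin/bash"`.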


I turned off opencode's auto-formatter, lol, since we have our own pre-commit lint hook for that.

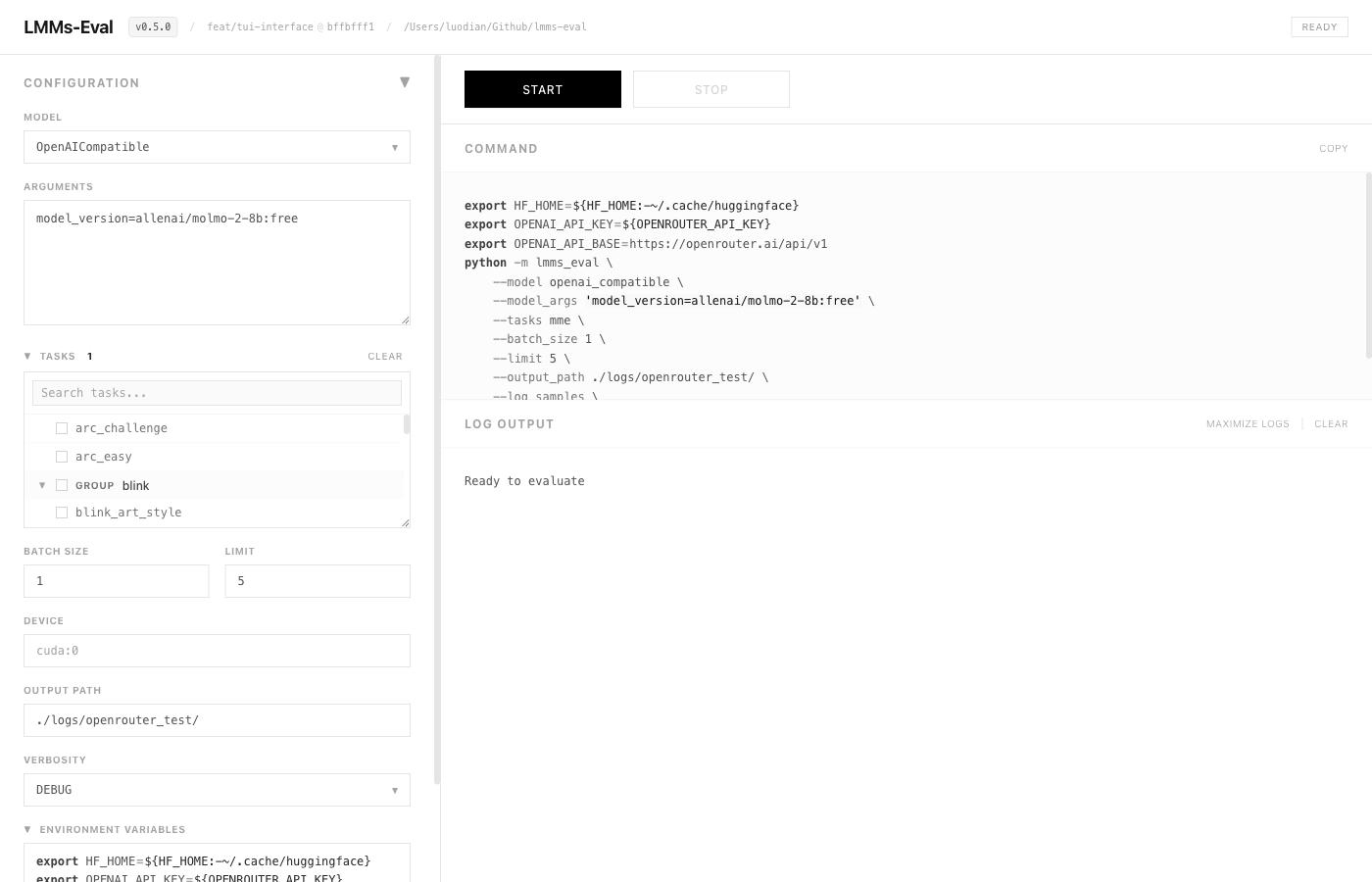

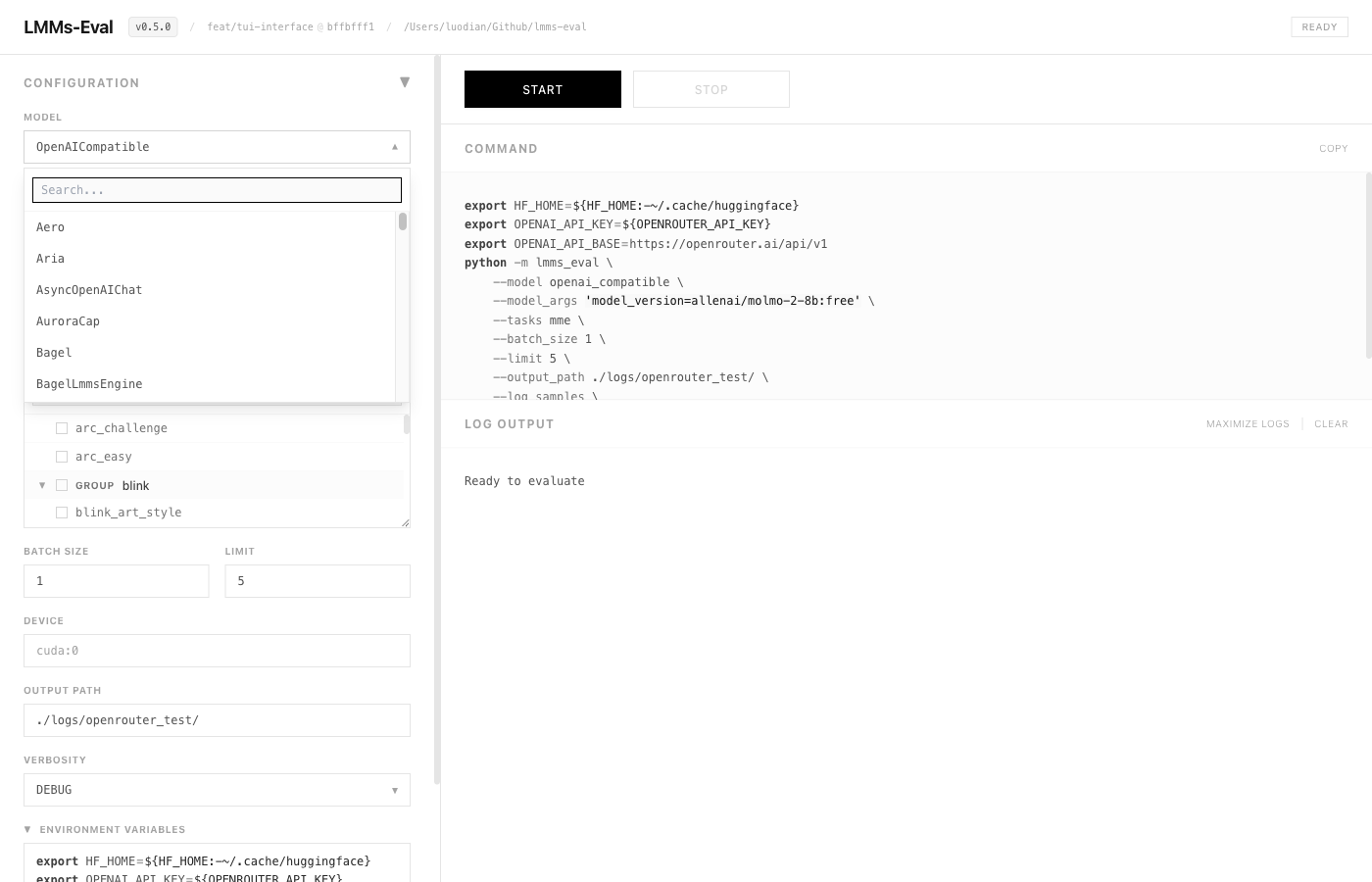

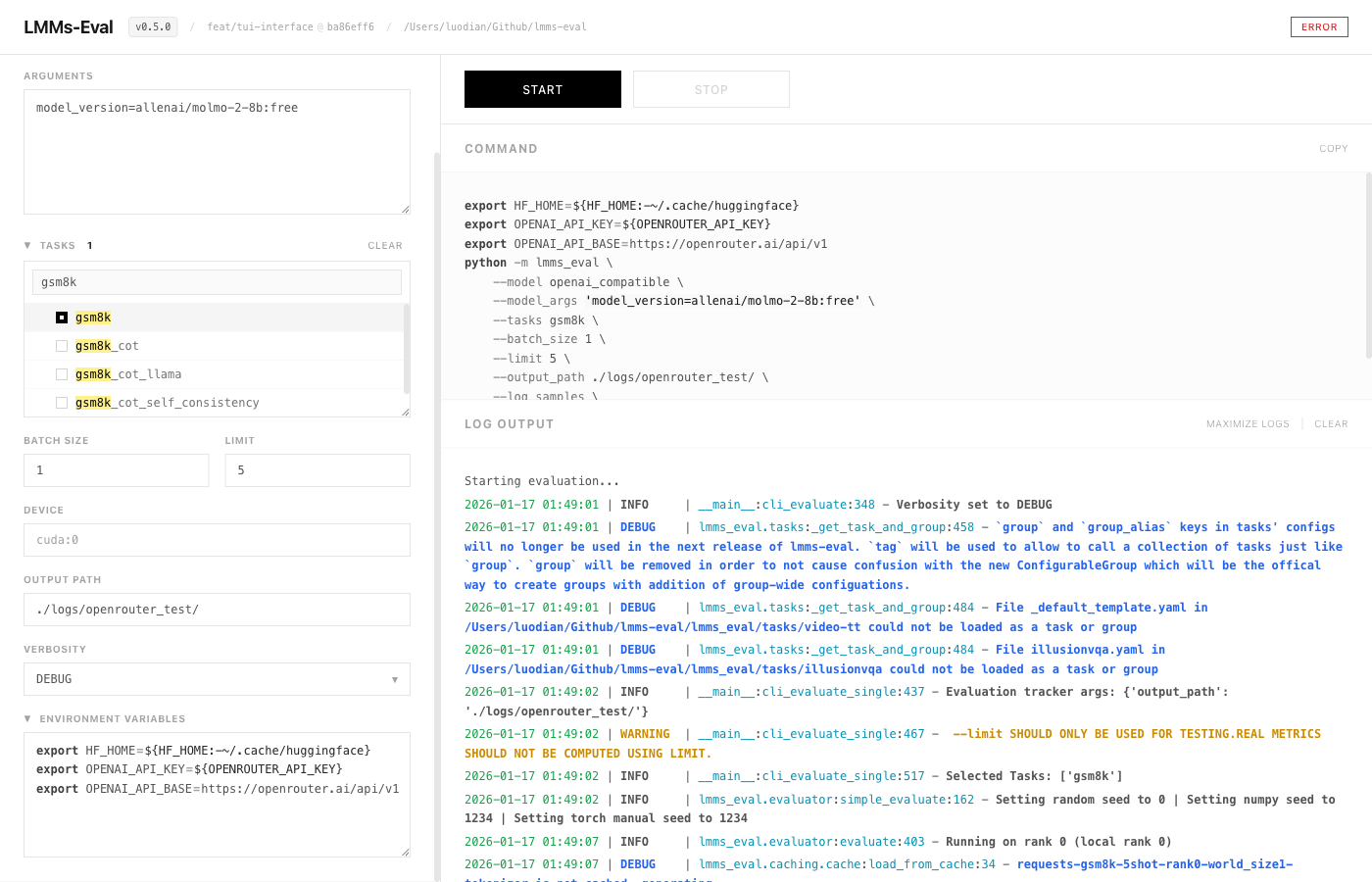

- Remove OpenTUI terminal-based UI (had rendering issues)
- Add React + Vite + Tailwind CSS web UI
- FastAPI backend serves both the API and static files
- CLI starts the server and opens the browser automatically

Features:

- Model selection dropdown
- Task list with search/filter and checkboxes
- Real-time command preview
- Live output streaming via SSE
- Start/Stop evaluation controls
- Settings: batch size, limit, device, verbosity, output path
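The live-streaming and Start/Stop features can be sketched with the standard library, assuming a POSIX host: each output line becomes a Server-Sent Events frame (one `data:` line per text line, terminated by a blank line), and the process is launched in its own session so Stop can signal the whole process group. The function names are hypothetical; in a FastAPI backend, a generator like `stream_frames` would typically be wrapped in `StreamingResponse(..., media_type="text/event-stream")`.

```python
import os
import signal
import subprocess
from typing import Iterator, List, Optional


def sse_frame(data: str, event: Optional[str] = None) -> str:
    """Encode one SSE message; multi-line data needs one `data:` field per line."""
    lines = [f"event: {event}"] if event else []
    lines += [f"data: {line}" for line in (data.splitlines() or [""])]
    return "\n".join(lines) + "\n\n"


def start(cmd: List[str]) -> subprocess.Popen:
    # start_new_session=True makes the child a session and process-group leader,
    # so stop() can also reach any worker processes it spawns.
    return subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT,
                            text=True, bufsize=1, start_new_session=True)


def stream_frames(proc: subprocess.Popen) -> Iterator[str]:
    """Yield one SSE frame per output line, then an `exit` event with the return code."""
    assert proc.stdout is not None
    for line in proc.stdout:
        yield sse_frame(line.rstrip("\n"))
    yield sse_frame(str(proc.wait()), event="exit")


def stop(proc: subprocess.Popen, timeout: float = 5.0) -> int:
    """Send SIGTERM to the child's process group, escalating to SIGKILL on timeout."""
    if proc.poll() is None:
        try:
            os.killpg(proc.pid, signal.SIGTERM)
            proc.wait(timeout=timeout)
        except ProcessLookupError:
            proc.wait()  # the group already exited
        except subprocess.TimeoutExpired:
            os.killpg(proc.pid, signal.SIGKILL)
            proc.wait()
    if proc.stdout is not None:
        proc.stdout.close()
    return proc.returncode
```

Signalling the group rather than only `proc.pid` matters when the evaluation spawns its own workers, which would otherwise keep running after Stop.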

Branch updated from e4a0ccf to d4c448e.

- Add shell syntax highlighting for the command preview and env vars editor
- Add ANSI color code parsing for log output
- Make Tasks and Environment Variables sections collapsible
- Unify typography with a monospace font and consistent sizing
- Add search functionality to the Select dropdown component
- Remove broken INVERT button
- Add group collapse/expand controls in the task list
- Make log output maximizable
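ANSI color parsing for log output comes down to splitting the text on SGR escape sequences (`ESC [ <params> m`) and tracking which codes are active for each run of text. The Python sketch below is illustrative only and is not the web UI's actual parser; as a simplifying assumption, it treats `0` or an empty parameter list as a full reset and otherwise accumulates codes.

```python
import re
from typing import List, Tuple

# Matches SGR sequences such as "\x1b[31m", "\x1b[1;32m", or "\x1b[m".
SGR_PATTERN = re.compile(r"\x1b\[([0-9;]*)m")


def parse_ansi(text: str) -> List[Tuple[str, Tuple[str, ...]]]:
    """Split text into (segment, active SGR codes) pairs."""
    segments: List[Tuple[str, Tuple[str, ...]]] = []
    active: List[str] = []
    pos = 0
    for match in SGR_PATTERN.finditer(text):
        if match.start() > pos:
            # Plain text between escapes inherits the currently active codes.
            segments.append((text[pos:match.start()], tuple(active)))
        for code in (match.group(1) or "0").split(";"):
            if code in ("", "0"):
                active.clear()  # reset all attributes
            else:
                active.append(code)
        pos = match.end()
    if pos < len(text):
        segments.append((text[pos:], tuple(active)))
    return segments
```

A renderer can then map each code tuple to CSS classes (for example, `31` to a red foreground) without ever injecting raw escape bytes into the DOM.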

Before you open a pull request, please check whether a similar issue already exists or has already been closed.

When you open a pull request, please be sure to include the following:

If you encounter lint warnings, you can use the following scripts to reformat the code.

Ask for review

Once you are comfortable with your PR, feel free to @-mention one of the contributors below for review:

General: @Luodian @kcz358 @pufanyi

Audio: @pbcong @ngquangtrung57

Thank you for your contributions!